One of the most widely used and best understood statistical algorithms is linear regression.

Wikipedia has a very simple definition: "In statistics, linear regression is a linear approach to modelling the relationship between a scalar response (or dependent variable) and one or more explanatory variables (or independent variables). The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression." Mathematically, the model is described by a very simple formula, y = Xb + e, where y is the dependent variable, X holds the independent variables, b is the vector of coefficients and e is the error term. The intercept is not e; it is usually included in b by adding a column of ones to X.
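To make the formula concrete, here is a minimal sketch that estimates b by ordinary least squares with NumPy. The data are synthetic (the true coefficients 3, 2 and -1 are made up for illustration), the helper name `fit_ols` is hypothetical, and the intercept is handled by prepending a column of ones to X, as described above.

```python
import numpy as np

def fit_ols(X, y):
    """Estimate b in y = Xb + e by ordinary least squares.

    A column of ones is prepended to X so that b[0] is the intercept.
    """
    X1 = np.column_stack([np.ones(len(X)), X])
    b, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return b

# Synthetic data: y = 3 + 2*x1 - 1*x2 + small noise (illustrative values)
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
e = rng.normal(scale=0.1, size=200)
y = 3 + X @ np.array([2.0, -1.0]) + e

b = fit_ols(X, y)
print(np.round(b, 2))  # close to [3, 2, -1]
```

Because the noise is small relative to the signal, the estimated coefficients land very close to the ones used to generate the data.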

Let's take an example where we can use linear regression to predict the price of a house based on its features. The target is the price at which the house was sold; the independent features are number of bedrooms, number of bathrooms, sqft_living, sqft_lot, floors, waterfront, view, condition, sqft_basement, year_built and year_renovated. These are tangible features that we can record in one way or another. Applying a linear regression library such as scikit-learn is quite simple in Python:

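```python
from sklearn import linear_model

# X_train, y_train and X_test are assumed to be prepared beforehand
# (e.g. with sklearn.model_selection.train_test_split)

# Create linear regression object
regr = linear_model.LinearRegression()
# Train the model using the training sets
regr.fit(X_train, y_train)
# Make predictions using the testing set
y_pred = regr.predict(X_test)
```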

This piece of code is sufficient to generate a model that predicts the price of a house. But if it is so simple, why is house-price prediction one of the most common Kaggle competitions?

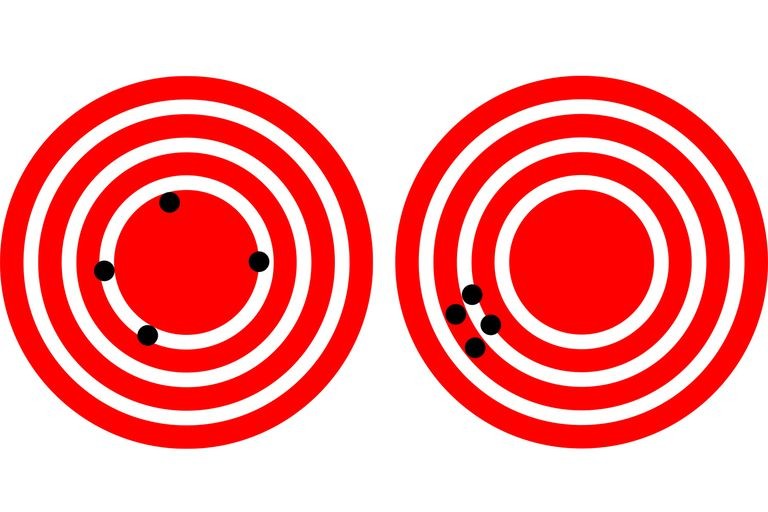

When we perform a basic regression, we more often than not find that the model is not accurate enough for our needs, so we want to improve it as much as possible. This is no small feat. In the next part I will list and explain some of the ways in which a linear regression model can be improved. But before going further, let's talk about measures of model performance. There are a number of measures that can be used to evaluate a linear regression:

a) Goodness of fit is one of the most commonly used measures; it is given by the R-squared value (coefficient of determination). In general, the higher the R-squared, the better the model fits the data. But this does not always hold: on the training data, R-squared never decreases when a feature is added, so a high value can simply reflect overfitting. Adjusted R-squared, which penalizes the number of features, is a common safeguard.

b) Graphical residual analysis is one of the most reliable ways to evaluate a model's performance: plot the residuals (actual minus predicted values) against the predicted values and check that they scatter randomly around zero with no visible pattern. It looks something like this.
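Both measures are easy to compute by hand. The sketch below uses only NumPy; the helper names (`r_squared`, `adjusted_r_squared`, `residuals`) are illustrative, and the four data points are made up. scikit-learn's `sklearn.metrics.r2_score` returns the same R-squared, and in practice the residuals would be passed to a plotting library such as matplotlib rather than printed.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot

def adjusted_r_squared(r2, n_samples, n_features):
    """Penalize R-squared for the number of features used."""
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_features - 1)

def residuals(y_true, y_pred):
    """Residuals to plot against y_pred; ideally they scatter randomly around 0."""
    return y_true - y_pred

# Tiny illustrative example (made-up numbers)
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.8, 5.1, 7.2, 8.9])

r2 = r_squared(y_true, y_pred)
print(r2, adjusted_r_squared(r2, n_samples=4, n_features=1))
print(residuals(y_true, y_pred))
```

Here R-squared is 0.995 and adjusted R-squared is 0.9925; the gap between the two grows as more features are added relative to the number of samples.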

Steps to improve the performance of a linear regression model:

1. Clean the data: This is a very time-consuming process, but a properly cleaned data set is one of the best ways to improve model accuracy. It involves outlier analysis, extrapolation, imputation and handling of missing values. What to do with outliers and missing values depends entirely on the data set, so simply removing them is often not the best solution.

2. Normalize the data: This starts before we model the data, during exploratory data analysis, where we check whether the data is skewed. A skewed dataset would look something like this.

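Our goal is to center each feature at a mean of 0 with a standard deviation of 1. Note that this rescaling alone does not change the shape of a distribution, so to bring a skewed feature closer to a normal distribution (remember the bell curve), a transformation such as taking the logarithm is usually applied first. The Python class for standardizing is scikit-learn's StandardScaler.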

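```python
from sklearn.preprocessing import StandardScaler
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean and std on the training data
X_test_scaled = scaler.transform(X_test)  # reuse the training statistics on the test data
```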
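The first two steps (cleaning and normalizing) can be sketched together as a small NumPy pipeline. This is a minimal illustration under assumptions, not a recipe: the `sqft_lot` values are hypothetical, median imputation and Tukey's 1.5 * IQR rule are just two common choices, and outliers are only flagged because what to do with them depends on the data set. `np.log1p` reduces the right skew before standardizing, and the `standardize` helper mirrors what `StandardScaler` does with its default settings.

```python
import numpy as np

def impute_median(col):
    """Replace NaNs in a 1-D array with the median of the observed values."""
    col = col.astype(float).copy()
    col[np.isnan(col)] = np.nanmedian(col)
    return col

def iqr_outlier_mask(col, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule) for inspection."""
    q1, q3 = np.percentile(col, [25, 75])
    iqr = q3 - q1
    return (col < q1 - k * iqr) | (col > q3 + k * iqr)

def standardize(X):
    """Center each column at mean 0 and scale it to standard deviation 1."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

# Hypothetical right-skewed feature (e.g. sqft_lot) with one missing value
sqft_lot = np.array([5000, 6000, 5500, np.nan, 7000, 80000], dtype=float)

filled = impute_median(sqft_lot)     # NaN -> 6000, the median of the observed values
outliers = iqr_outlier_mask(filled)  # flags the 80000 lot for a closer look
logged = np.log1p(filled)            # compresses the long right tail
scaled = standardize(logged.reshape(-1, 1))
print(outliers, scaled.ravel().round(2))
```

In a real pipeline, the median, quartiles, mean and standard deviation would be computed on the training split only and then reused on the test split.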

3. Handle categorical variables: Categorical variables cannot be used by linear regression directly because they are not numeric or continuous. The usual way to handle them is to encode them with a Python library; one popular encoding is one-hot encoding (for example, scikit-learn's OneHotEncoder or pandas' get_dummies).

4. Remove unwanted features: Some features in the data are not very useful, and carrying them along only adds noise. We can use the pandas corr() method to see how strongly each feature correlates with the target (and with the other features) and keep the most important ones in the model.

5. PCA: Principal Component Analysis applies an orthogonal transformation that converts a set of correlated features into a set of linearly uncorrelated features, called principal components. There is one risk: PCA is sensitive to the scaling of the original features, so they should be standardized first.

6. Regularization: This technique involves some math. Ridge regression is one of the most frequently used forms of regularization: it adds a penalty on the size of the coefficients to the least-squares objective, which keeps the estimates stable when the features suffer from multicollinearity. Multicollinearity is the existence of strong linear relationships between independent variables; it makes the individual coefficient estimates unstable, and handling it properly can require some heavy statistics.
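For step 3 (handling categorical variables), one-hot encoding turns each category into its own 0/1 column. The NumPy sketch below is illustrative only: the `zipcode_area` column and the `one_hot` helper are hypothetical. In practice scikit-learn's `OneHotEncoder(drop="first")` or pandas' `get_dummies(..., drop_first=True)` do the same job. Dropping one column keeps the dummy columns from being perfectly collinear with the intercept.

```python
import numpy as np

def one_hot(values, drop_first=True):
    """One-hot encode a 1-D array of category labels.

    Returns the category names and a 0/1 matrix with one column per category.
    With drop_first=True the first (alphabetical) category becomes the baseline,
    which avoids perfect collinearity with the intercept (the "dummy variable trap").
    """
    categories, codes = np.unique(values, return_inverse=True)
    matrix = np.eye(len(categories), dtype=int)[codes]
    if drop_first:
        return categories[1:], matrix[:, 1:]
    return categories, matrix

# Hypothetical categorical column
zipcode_area = np.array(["north", "south", "east", "north"])
names, encoded = one_hot(zipcode_area)
print(names)    # ['north' 'south']
print(encoded)
```

A row of all zeros means the baseline category ("east" here).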
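For step 4 (removing unwanted features), the idea behind using `corr()` can be sketched with NumPy's `np.corrcoef`: compute each feature's Pearson correlation with the target and keep the features above a cut-off. The 0.3 threshold, the helper names and the synthetic `sqft_living`/`noise` data are assumptions for illustration; pandas' `DataFrame.corr()` produces the full pairwise matrix in one call. Pearson correlation only captures linear, one-feature-at-a-time relationships, so it is best treated as a screening aid rather than a final verdict.

```python
import numpy as np

def target_correlations(X, y):
    """Pearson correlation of each column of X with the target y."""
    return np.array([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])

def select_features(X, y, names, threshold=0.3):
    """Keep the columns whose absolute correlation with y is at least `threshold`."""
    r = target_correlations(X, y)
    keep = np.abs(r) >= threshold
    return X[:, keep], [name for name, k in zip(names, keep) if k]

# Synthetic data: price depends on sqft_living but not on a pure-noise column
rng = np.random.default_rng(1)
n = 500
sqft_living = rng.normal(2000, 500, n)
noise = rng.normal(0, 1, n)
price = 150 * sqft_living + rng.normal(0, 20000, n)

X = np.column_stack([sqft_living, noise])
names = ["sqft_living", "noise"]
r = target_correlations(X, price)
X_kept, kept = select_features(X, price, names)
print(dict(zip(names, r.round(2))), kept)
```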
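For step 5 (PCA), a bare-bones version can be written with NumPy's SVD. This sketch standardizes the columns first, precisely because PCA is sensitive to scaling; the `pca` helper and the synthetic data (two near-duplicate columns plus an independent one) are made up for illustration, and `sklearn.decomposition.PCA` is the usual library choice.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components using SVD.

    Columns are standardized first because PCA is sensitive to feature scale.
    Returns the component scores and the fraction of variance each one explains.
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    U, S, Vt = np.linalg.svd(Z, full_matrices=False)
    scores = Z @ Vt[:n_components].T
    explained = (S ** 2) / np.sum(S ** 2)
    return scores, explained[:n_components]

# Synthetic features: column 1 is a near-copy of column 0, column 2 is independent
rng = np.random.default_rng(2)
a = rng.normal(size=300)
X = np.column_stack([a, a + rng.normal(scale=0.1, size=300), rng.normal(size=300)])

scores, explained = pca(X, n_components=2)
# The principal component scores are uncorrelated with each other
print(np.corrcoef(scores.T)[0, 1].round(6), explained.round(3))
```

Because the first two columns carry almost the same information, the first component should explain roughly two thirds of the variance, which is how PCA compresses correlated features.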
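For step 6 (regularization), ridge regression adds a penalty alpha * ||b||^2 to the least-squares objective, which on centered data has the closed-form solution b = (X^T X + alpha * I)^(-1) X^T y. The sketch below uses a hypothetical `fit_ridge` helper and synthetic, nearly collinear features to compare alpha = 0 (plain least squares) with alpha = 1; `sklearn.linear_model.Ridge(alpha=...)` is the library equivalent.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Ridge regression: minimize ||y - b0 - Xb||^2 + alpha * ||b||^2.

    X and y are centered first so that the intercept b0 is not penalized.
    Returns (intercept, coefficients).
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    n_features = X.shape[1]
    b = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ yc)
    b0 = y_mean - x_mean @ b
    return b0, b

# Two nearly collinear features; the target really depends only on x1
rng = np.random.default_rng(3)
x1 = rng.normal(size=100)
x2 = x1 + rng.normal(scale=0.01, size=100)
X = np.column_stack([x1, x2])
y = 3 * x1 + rng.normal(scale=0.5, size=100)

for alpha in (0.0, 1.0):
    b0, b = fit_ridge(X, y, alpha)
    print(alpha, np.round(b, 2))
```

With near-duplicate columns, the least-squares coefficients can swing to large values of opposite sign, while ridge splits the effect roughly evenly between the two columns and keeps their sum close to 3.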

If you perform these steps carefully, you may well end up with a model that fits well.
